Adapting OpenShift Origin for High Load

OpenShift Origin is a Platform-as-a-Service (PaaS) software platform that lets us scale applications horizontally and manage multiple applications in one cluster. One OpenShift node can host many applications: the default settings allow 100 gears per node (which could be 100 different applications, or only 4 applications with 25 gears each). Each gear runs a separate Apache instance. This post describes the adjustments I made on an OpenShift M4 cluster that was deployed using the definitive guide. I really should upgrade the cluster to a newer version, but we are currently running production load on it.

The Node architecture

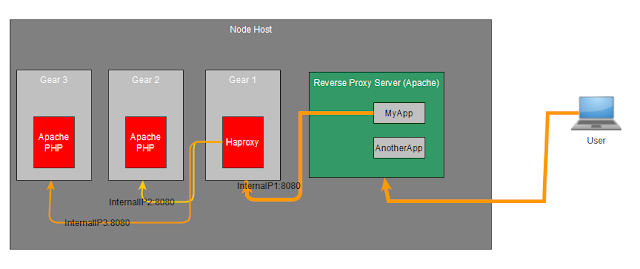

Load balancing in an OpenShift application is done by HAProxy. The general application architecture is shown below (replace Java with PHP for PHP-based applications) (ref: OpenShift Blog: How haproxy scales apps).

What is not shown above is that the user actually connects to another Apache instance running on the node host, which works as a reverse proxy server. This can be seen in the image below (taken from Red Hat Access: OpenShift Node Architecture):

Using HAProxy as a load balancer means that 'Gear 1' in the picture above is replaced by an HAProxy instance, which distributes the load to the other gears. I tried to draw the combined picture using yEd; this diagram is the result:

HAProxy limits

First, the HAProxy cartridge specifies 128 as the session limit (maxconn). What if I plan to have 1000 concurrent users? If the users are on IE, each could open 3 concurrent connections, for a total of 3000 connections. So this limit needs to be changed.
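The sizing arithmetic is simple, but writing it down makes the gap obvious. The per-user connection count is the rough IE figure from the text, an assumption rather than a measurement:

```python
# Rough sizing: how many simultaneous client connections HAProxy must accept.
DEFAULT_SESSION_LIMIT = 128  # cartridge default session limit (maxconn)


def required_connections(concurrent_users: int, conns_per_user: int) -> int:
    """Peak number of simultaneous client connections to plan for."""
    return concurrent_users * conns_per_user


needed = required_connections(1000, 3)  # 1000 users, ~3 connections each (assumed)
print(f"needed={needed}, default limit={DEFAULT_SESSION_LIMIT}")
print(f"over the default limit by a factor of {needed / DEFAULT_SESSION_LIMIT:.1f}")
```

With these inputs the peak is 3000 connections, more than 23 times the default limit.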

I read somewhere that HAProxy is capable of proxying tens of thousands of connections, so I changed the maxconn values from 256 and 128 to 25600 and 12800, respectively. The file to change is haproxy/conf/haproxy.cfg in the application's primary (front) gear, lines 28 and 53. A normal user is allowed to make this change (rhc ssh into your gear first).
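Editing the two lines by hand is fine, but with several applications a small script may help. The sketch below is a hypothetical helper, not part of OpenShift or the cartridge: it rewrites "maxconn N" directives using an old-to-new mapping and leaves every other line alone. The sample config is a trimmed illustration, not the real cartridge file, so review the result against your actual haproxy.cfg before saving it.

```python
import re
from typing import Mapping

# Hypothetical helper: rewrite "maxconn N" directives in haproxy.cfg text.
# Values missing from the mapping, and commented-out lines, are left unchanged.
MAXCONN_RE = re.compile(r"^([ \t]*maxconn[ \t]+)(\d+)", re.MULTILINE)


def bump_maxconn(cfg_text: str, mapping: Mapping[int, int]) -> str:
    def repl(m: "re.Match[str]") -> str:
        old = int(m.group(2))
        return f"{m.group(1)}{mapping.get(old, old)}"
    return MAXCONN_RE.sub(repl, cfg_text)


sample = """global
    maxconn 256

defaults
    mode http
    maxconn 128
"""
print(bump_maxconn(sample, {256: 25600, 128: 12800}))
```

Mapping specific old values (rather than overwriting every maxconn) keeps the script from touching limits you did not mean to change.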

It seems that the open files limit also needs to be changed. The indication is that these error messages appear in app-root/logs/haproxy.log:

[WARNING] 018/142729 (27540) : [/usr/sbin/haproxy.main()] Cannot raise FD limit to 51223.

[WARNING] 018/142729 (27540) : [/usr/sbin/haproxy.main()] FD limit (1024) too low for maxconn=25600/maxsock=51223. Please raise 'ulimit-n' to 51223 or more to avoid any trouble.
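The numbers in that warning can be checked by hand. A proxied connection needs two sockets (one to the client, one to the backend gear), so maxconn=25600 alone accounts for 51200 descriptors. The remaining 23 in maxsock=51223 is read off the log rather than derived; presumably it covers HAProxy's listeners and other internal sockets. The 60000 value is the limit used in the fix.

```python
# Worked check of the figures in the haproxy.log warning.
MAXCONN = 25600              # new global maxconn
MAXSOCK_FROM_LOG = 51223     # "maxsock=51223" reported by HAProxy
OLD_FD_LIMIT = 1024          # "FD limit (1024)"
NEW_HARD_LIMIT = 60000       # value planned for /etc/security/limits.conf

# Each proxied connection holds two sockets: client side and gear side.
proxied_sockets = 2 * MAXCONN
# The rest is HAProxy's own overhead, taken from the log, not computed.
overhead = MAXSOCK_FROM_LOG - proxied_sockets

print(f"sockets for proxied connections: {proxied_sockets}")    # 51200
print(f"extra sockets in maxsock: {overhead}")                   # 23
print(f"fits in {OLD_FD_LIMIT}? {MAXSOCK_FROM_LOG <= OLD_FD_LIMIT}")      # False
print(f"fits in {NEW_HARD_LIMIT}? {MAXSOCK_FROM_LOG <= NEW_HARD_LIMIT}")  # True
print(f"headroom: {NEW_HARD_LIMIT - MAXSOCK_FROM_LOG}")          # 8777
```

As a rule of thumb, the open-files limit should be a bit more than twice the global maxconn.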

To fix this, edit the configuration file /etc/security/limits.conf as root and add this line:

* hard nofile 60000

Then stop and start your gear so that the change takes effect.
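The "Cannot raise FD limit" warning suggests that HAProxy tries to raise its own soft limit at startup, and an unprivileged process can only do that up to the hard limit; that is presumably why raising the hard limit alone is enough here. A minimal sketch, assuming a Linux host with Python 3, that checks whether 51223 descriptors are reachable. Run it from a fresh login session, since limits.conf is normally applied by pam_limits when a session starts.

```python
import resource

# Can a process started from this session raise its open-files soft limit
# to what HAProxy asked for? (Linux/Unix only: uses the resource module.)
REQUIRED_FDS = 51223  # maxsock from the haproxy.log warning


def can_raise_nofile(required: int) -> bool:
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    if soft == resource.RLIM_INFINITY or soft >= required:
        return True  # already high enough, nothing to raise
    # An unprivileged process may raise its soft limit up to the hard limit only.
    return hard == resource.RLIM_INFINITY or hard >= required


if __name__ == "__main__":
    soft, hard = resource.getrlimit(resource.RLIMIT_NOFILE)
    print(f"soft={soft} hard={hard} enough={can_raise_nofile(REQUIRED_FDS)}")
```

If it still prints enough=False after editing limits.conf, the session was most likely started before the change.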

Preventing port number exhaustion

A high load might cause the OpenShift node to run out of port numbers. The symptom is this error message, repeated many times, in /var/log/httpd/error_log (only accessible to root):

[Mon Feb 01 10:35:37 2016] [error] (99)Cannot assign requested address: proxy: HTTP: attempt to connect to 127.4.238.2:8080 (*) failed
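Before changing anything, it may help to confirm that the node really is short of local ports. A minimal sketch, assuming IPv4 connections only: tally TCP socket states in /proc/net/tcp, optionally only for connections to the gear port 8080 seen in the log. In that file the "st" column is hex and 06 means TIME_WAIT, so a very large TIME_WAIT count points at the port exhaustion explained below.

```python
import os
from collections import Counter
from typing import Optional

# Hex state codes used in /proc/net/tcp (only the ones of interest here).
TCP_STATES = {"01": "ESTABLISHED", "06": "TIME_WAIT", "0A": "LISTEN"}


def tcp_state_counts(proc_net_tcp: str, remote_port: Optional[int] = None) -> Counter:
    counts: Counter = Counter()
    for line in proc_net_tcp.splitlines()[1:]:   # first line is the column header
        fields = line.split()
        if len(fields) < 4:
            continue
        remote, state = fields[2], fields[3]
        port = int(remote.split(":")[1], 16)     # ports are printed in hex
        if remote_port is not None and port != remote_port:
            continue
        counts[TCP_STATES.get(state, state)] += 1
    return counts


if __name__ == "__main__" and os.path.exists("/proc/net/tcp"):
    with open("/proc/net/tcp") as f:
        print(tcp_state_counts(f.read(), remote_port=8080))
```

Running it a few times under load shows whether the TIME_WAIT count toward port 8080 keeps climbing.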

Such error messages are related to bug https://bugzilla.redhat.com/show_bug.cgi?id=1085115, which gives us a clue about what really happened. On my system I used mod_rewrite as the reverse proxy module, which has the shortcoming of not reusing socket connections to the backend gears. Opening and closing sockets too often leaves many sockets in the TIME_WAIT state, and a local port held by a TIME_WAIT socket is not reused until that state expires (60 seconds on Linux). One workaround is to enable net.ipv4.tcp_tw_reuse by adding this line to /etc/sysctl.conf as root:

net.ipv4.tcp_tw_reuse = 1

To make the change take effect immediately, run this at the shell prompt:

sysctl -w net.ipv4.tcp_tw_reuse=1
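A back-of-the-envelope estimate shows why TIME_WAIT sockets run the node out of ports. All the failures in the log target a single backend address (127.4.238.2:8080), and each closed connection blocks its local port for the TIME_WAIT period, so the sustainable rate of new connections to that address is roughly the number of ephemeral ports divided by that period. The port range 32768-60999 is an assumed, common Linux default; check net.ipv4.ip_local_port_range on your node.

```python
# Rough ceiling on new outgoing connections per second from the node's
# front-end Apache to ONE backend address:port, when every closed connection
# leaves its local port in TIME_WAIT. Port range is an assumption.
PORT_LOW, PORT_HIGH = 32768, 60999


def max_new_conns_per_second(low: int, high: int, time_wait_s: float) -> float:
    usable_ports = high - low + 1          # ports the kernel can hand out
    return usable_ports / time_wait_s      # each port is blocked for time_wait_s


for tw in (60, 120):                       # 60 s is Linux's TIME_WAIT length
    rate = max_new_conns_per_second(PORT_LOW, PORT_HIGH, tw)
    print(f"TIME_WAIT {tw:>3} s -> about {rate:.0f} new connections/s")
```

Under these assumptions the ceiling is roughly 470 new connections per second (about 235 with a 2-minute timeout), which a busy node fronted by mod_rewrite can plausibly exceed. tcp_tw_reuse raises it by letting the kernel reuse TIME_WAIT ports for new outgoing connections when it considers this safe.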

Conclusion

These changes, done properly, adjust the software limits on the OpenShift node, enabling it to handle a high number of requests and/or concurrent connections. Of course, you still need to design your database queries to be fast and keep track of memory usage. But if left unchanged, these software limits might become a bottleneck for your OpenShift application's performance.
